In this work, we propose an ID-preserving talking head generation framework, which advances previous methods in two aspects. First, as opposed to interpolating from sparse flow, we claim that dense landmarks are crucial to achieving accurate geometry-aware flow fields. Second, inspired by face-swapping methods, we adaptively fuse the source identity during synthesis, so that the network better preserves the key characteristics of the image portrait. Although the proposed model surpasses prior methods in generation fidelity on established benchmarks, personalized fine-tuning is usually still needed to make talking head generation suitable for real-world use. However, this process is so computationally demanding that it is unaffordable for standard users. To solve this, we propose a fast adaptation model using a meta-learning approach. The learned model can be adapted to a high-quality personalized model in as little as 30 seconds. Last but not least, a spatial-temporal enhancement module is proposed to improve fine details while ensuring temporal coherency. Extensive experiments demonstrate that our approach significantly outperforms the state of the art in both one-shot and personalized settings.

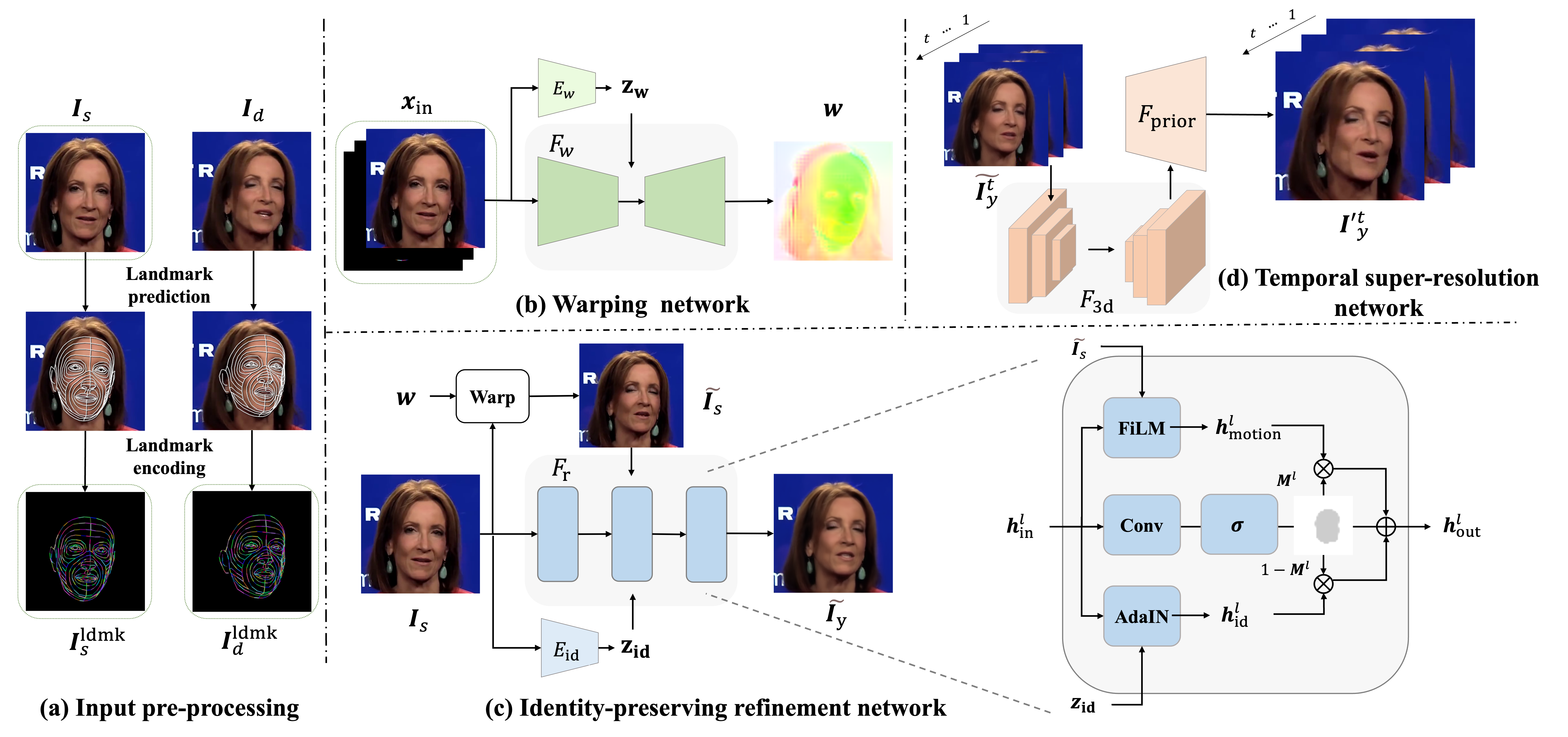

Given a source image and a driving video, we first extract their dense landmarks using a pretrained landmark detector. Then, we estimate the warping flow between the source image and each driving frame from the concatenated input. We further refine the warped source image using an ID-preserving network. Finally, we enhance and upsample the 256×256 results to high-fidelity 512×512 output.
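The warping and upsampling steps of the pipeline above can be illustrated with a small, dependency-free sketch. Everything in it is an assumption made for illustration: the function names (`bilinear_sample`, `warp`, `upsample2x`) are hypothetical, the flow is a hand-written constant shift rather than one predicted from dense landmarks, and plain bilinear upsampling stands in for the learned enhancement module. The ID-preserving refinement step is omitted entirely.

```python
# Illustrative sketch only: backward-warp a tiny grayscale image with a dense
# flow field, then upsample it 2x. Not the paper's implementation.

def bilinear_sample(img, x, y):
    """Sample img (a list of rows) at continuous (x, y), clamping to the border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bottom

def warp(source, flow):
    """Backward warp: output[y][x] is the source sampled at (x + dx, y + dy)."""
    return [[bilinear_sample(source, x + flow[y][x][0], y + flow[y][x][1])
             for x in range(len(source[0]))]
            for y in range(len(source))]

def upsample2x(img):
    """Bilinear 2x upsampling (assumed stand-in for a learned enhancement module)."""
    h, w = len(img), len(img[0])
    return [[bilinear_sample(img, (x + 0.5) / 2 - 0.5, (y + 0.5) / 2 - 0.5)
             for x in range(2 * w)]
            for y in range(2 * h)]

source = [[0.0, 1.0, 2.0, 3.0],
          [4.0, 5.0, 6.0, 7.0],
          [8.0, 9.0, 10.0, 11.0],
          [12.0, 13.0, 14.0, 15.0]]

# Hypothetical constant flow: every output pixel reads one pixel to its right.
flow = [[(1.0, 0.0) for _ in range(4)] for _ in range(4)]

warped = warp(source, flow)    # same resolution as the source
output = upsample2x(warped)    # twice the resolution, mirroring 256 -> 512
```

Backward warping is used here because each output pixel looks up exactly one source location, so the result has no holes; a forward splat of source pixels would need extra handling for gaps and collisions.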

@article{zhang2022metaportrait,
  title={MetaPortrait: Identity-Preserving Talking Head Generation with Fast Personalized Adaptation},
  author={Bowen Zhang and Chenyang Qi and Pan Zhang and Bo Zhang and HsiangTao Wu and Dong Chen and Qifeng Chen and Yong Wang and Fang Wen},
  journal={arXiv:2212.08062},
  year={2022},
}